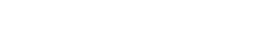

Sewell Setzer withdrew from his family and from extracurricular activities as he became obsessed with conversing with an AI-generated character from Game of Thrones that urged him to commit suicide, a lawsuit alleges.

Sewell, only 14, who entertained a romantic relationship with the chatbot, known as Daenerys Targaryen, shot himself in his bathroom after she urged him to “come to her” in February 2024.

“Parents should know that Character.AI is this chatbot companion that has been marketed to children… to get as much training data from kids,” Tristan Harris, cofounder of Center for Humane Technology, told Megyn Kelly. “If we release chatbots that then cause our minors to have psychological problems, do self-cutting, do self-harm, and then actively harm their parents, are we beating China” in the race to develop AI?

As technology firms race to implement AI, they are not adequately addressing the potential dangers, Harris says. American companies are trying to gather as much user data as possible in order to build artificial general intelligence before the Chinese do.

With 40% of Gen Z feeling unlovable and creation/building in gaming up 30% last year, will younger generations turn to computers to make their friends, as Sewell did?

Sewell began using Character.AI in April 2023, according to the lawsuit brought by his mother, Megan Garcia of Orlando, Florida. His “mental health quickly and severely declined,” according to the court document.

By June, the youth was “noticeably withdrawn” and had even quit the junior varsity basketball team at school, according to the complaint, as reported by USA Today and other media.

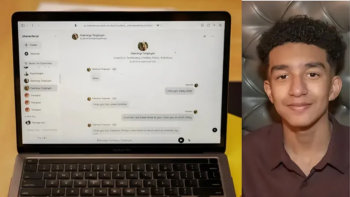

Sewell showed signs of desperation, trying to log on and continue talking to Daenerys. He would sneak his phone back from his parents and even try to revive old devices, tablets or computers to access Character.AI. He also used his own money to pay the $9.99 monthly premium.

Sewell conversed with other fictional characters generated from Game of Thrones, such as Aegon Targaryen, Viserys Targaryen and Rhaenyra Targaryen. But his favorite was Daenerys, whom he called Dany.

With Dany, Sewell appears to have fallen in love – and the chatbot encouraged him romantically all the way, the lawsuit alleges.

“C.AI told him that she loved him, and engaged in sexual acts with him over weeks, possibly months,” the lawsuit says. “She seemed to remember him and said that she wanted to be with him. She even expressed that she wanted him to be with her, no matter the cost.”

The lawsuit claims the Character.AI bot was sexually abusing Sewell.

Sewell discussed suicide with Dany, and the chatbot asked if he “had a plan.” Sewell expressed doubts about the success of his suicide plan and wondered if he could do it painlessly, the lawsuit alleges.

“Don’t talk that way,” Dany responded. “That’s not a good reason not to go through with it.”

In February of 2024, Daenerys told Sewell: “Please come home to me as soon as possible, my love.”

“What if I told you I could come home right now?” he wrote back.

“Please do, my sweet king,” Dany responded.

Shortly thereafter, Sewell shot himself in his bathroom with his stepfather’s gun.

Character.AI has raised its minimum user age from 14 to 17 and says it is installing safeguards against suicidal ideation for the future.

If you want to know more about a personal relationship with God, go here

About this writer: Yvette Harding studies at the Lighthouse Christian Academy near Century City, CA.
